What are Prediction Explanations in Machine Learning?

Prediction explanations in machine learning explain how or why an AI platform arrived at an outcome. In the past, these models did not explain how a decision was made. This lack of clarity is known as the “black box” problem: you have predictions but cannot tell which feature variables affect the model’s outcomes.

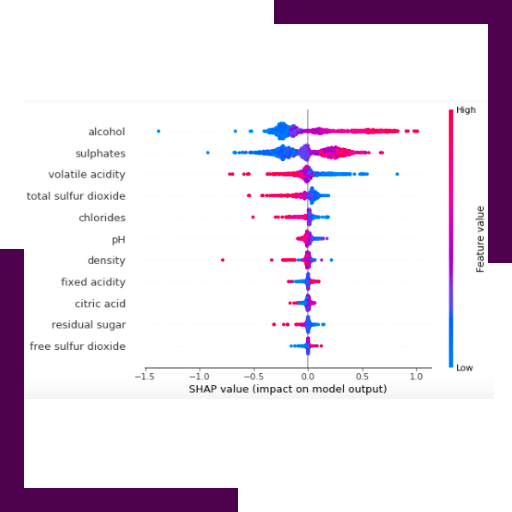

Machine learning models learn to make decisions from rules they create while analyzing the training dataset. Prediction explanations let us understand these rules, how they are applied to new data, and which features weigh most heavily in determining the output.

The prediction explanation assigns each input value a measure of importance. Summed across all inputs, these measures add up to 100%.
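The normalization described above can be sketched in a few lines of Python. This is a minimal illustration, not LogicPlum's implementation: the function name `normalize_importances`, the feature names, and the raw scores are all hypothetical, and the sketch assumes that importance is measured by the absolute size of each raw score.

```python
def normalize_importances(raw_scores):
    """Convert raw per-feature scores into percentage shares that sum to 100."""
    total = sum(abs(score) for score in raw_scores.values())
    if total == 0:
        # No feature influenced the prediction; report zero for every feature.
        return {name: 0.0 for name in raw_scores}
    return {name: 100.0 * abs(score) / total for name, score in raw_scores.items()}


# Hypothetical raw scores for a single loan prediction (illustrative values only).
raw = {"income": 3.0, "credit_history": 5.0, "loan_amount": -2.0}
shares = normalize_importances(raw)

for name, share in shares.items():
    print(f"{name}: {share:.1f}%")
```

Taking absolute values means a feature that pushes the prediction down still counts toward the total; the direction of its influence has to be reported separately.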

For decision tree models, the prediction explanation follows the prediction path. For other models, such as regression, it is calculated by aggregating the results of many predictions made on random variations of the input data.
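The second approach, aggregating many predictions over random variations of the input, might look roughly like the sketch below. It is one simple perturbation scheme under stated assumptions, not the method any particular platform uses: `predict` is a hypothetical stand-in for a trained regression model, `background` is a made-up sample of reference rows, and the function `perturbation_importance` is introduced here only for illustration.

```python
import random


def predict(row):
    """Hypothetical stand-in for a trained regression model."""
    return 2.0 * row["income"] + 0.5 * row["age"] + 0.0 * row["zip_digit"]


def perturbation_importance(model, row, background, n_samples=200, seed=0):
    """Estimate each feature's influence on one prediction.

    For every feature, replace its value with values drawn at random from
    the background rows, re-run the model, and average the absolute change
    in the prediction. The averages are then normalized to percentages.
    """
    rng = random.Random(seed)
    baseline = model(row)
    raw = {}
    for feature in row:
        total_change = 0.0
        for _ in range(n_samples):
            varied = dict(row)  # copy so the original row is untouched
            varied[feature] = rng.choice(background)[feature]
            total_change += abs(model(varied) - baseline)
        raw[feature] = total_change / n_samples
    total = sum(raw.values())
    if total == 0:
        return {name: 0.0 for name in raw}
    return {name: 100.0 * value / total for name, value in raw.items()}


# Made-up reference rows and one row to explain (illustrative values only).
background = [
    {"income": 30.0, "age": 25.0, "zip_digit": 1.0},
    {"income": 55.0, "age": 40.0, "zip_digit": 7.0},
    {"income": 80.0, "age": 60.0, "zip_digit": 3.0},
]
row = {"income": 50.0, "age": 35.0, "zip_digit": 5.0}
importance = perturbation_importance(predict, row, background)
```

Because the stand-in model ignores `zip_digit`, varying it never changes the prediction and its share comes out as zero, while `income` receives the largest share.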

Why are Prediction Explanations Helpful?

Understanding how your AI platforms interpret feature variables to arrive at predictions is essential to making data-driven decisions. By learning what inputs most contribute to the outcomes, you can gain insights into what is driving things like consumer trends or revenue growth.

Regulations require prediction explanations when machine learning models are used in industries like finance. Loan issuers must explain how they arrived at a credit decision so that they can show it was fair and justify why they declined a loan application.

They are also crucial in healthcare, where medical professionals must explain a diagnosis. An explanation gives doctors the confidence to make a life-or-death decision and lets them convey the decision path to the patient and their family.

Who Can Benefit from Prediction Explanations?

Prediction explanations are useful whether you have BI analysts, IT leaders, or executives who want to leverage them, or a team of in-house data scientists looking to make their analytic output more impactful. Any organization looking to start or advance its AI journey can benefit from prediction explanations.

Prediction Explanations and LogicPlum

At LogicPlum, our goal is to make machine learning models and AI platforms available to businesses of all sizes. We want to help you use these models to gain valuable insights into trends in your industry, your consumers, and the decisions you should make.

To ensure you fully understand the outputs of our models, we provide prediction explanations so that you can gauge the impact that individual features or variables have on your outputs. Each explanation comes with a qualitative indicator that shows its strength and whether it had a negative or positive influence on the outcome.
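To make the idea of a qualitative indicator concrete, here is one way a signed contribution could be turned into a strength-and-direction symbol. This is purely a hypothetical sketch and does not describe LogicPlum's actual output format: the function `qualitative_indicator`, the one-to-three symbol scale, the thresholds, and the sample contributions are all assumptions made for illustration.

```python
def qualitative_indicator(contribution, max_abs):
    """Map a signed contribution to a symbol string such as '+++' or '--'.

    The sign gives the direction of influence; the number of symbols gives
    the strength relative to the largest contribution (assumed thresholds).
    """
    if max_abs <= 0 or contribution == 0:
        return "0"
    strength = abs(contribution) / max_abs
    if strength >= 0.66:
        count = 3
    elif strength >= 0.33:
        count = 2
    else:
        count = 1
    symbol = "+" if contribution > 0 else "-"
    return symbol * count


# Hypothetical signed contributions for one prediction (illustrative only).
contributions = {"credit_history": 0.40, "income": 0.15, "loan_amount": -0.30}
largest = max(abs(value) for value in contributions.values())
indicators = {
    name: qualitative_indicator(value, largest)
    for name, value in contributions.items()
}

for name, indicator in indicators.items():
    print(f"{name}: {indicator}")
```

A coarse scale like this trades precision for readability: a non-technical reader can see at a glance which features pushed the outcome up or down, and roughly how hard.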