What does the Central Limit Theorem say?

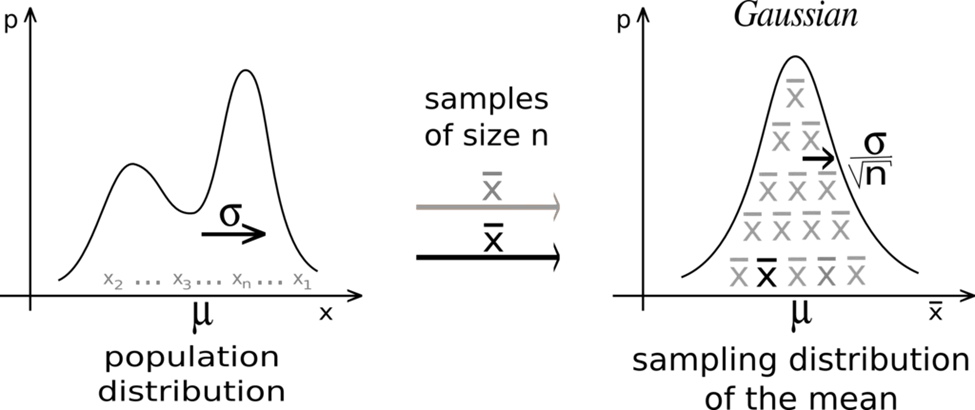

The Central Limit Theorem states that, for independent observations drawn from the same population with a finite variance, the sampling distribution of the sample mean approaches a Normal distribution as the sample size increases.
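Stated formally for independent, identically distributed observations X1, ..., Xn with mean μ and finite variance σ², the standardized sample mean converges in distribution to a standard Normal distribution:

```latex
\bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i, \qquad
\frac{\bar{X}_n - \mu}{\sigma / \sqrt{n}} \xrightarrow{\;d\;} \mathcal{N}(0, 1) \quad \text{as } n \to \infty .
```

Informally, for large n the sample mean is approximately Normal with mean μ and standard deviation σ/√n.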

In other words, whatever the shape of the population distribution (provided its variance is finite), the distribution of the sample mean approaches a Gaussian, or Normal, distribution as the sample size increases.
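The Python sketch below illustrates this with a deliberately skewed population: an exponential distribution with mean 1 and standard deviation 1 (an assumed example, not the one in the figure). It draws 10,000 samples of size 30 and checks that their means center on the population mean, spread out by roughly σ/√n, and fall within 1.96 standard errors about 95% of the time, as a Normal distribution would. The helper names are hypothetical.

```python
import random
import statistics


def sample_means(population_draw, sample_size, num_samples, seed=0):
    """Draw `num_samples` samples of size `sample_size` and return their means."""
    rng = random.Random(seed)
    return [
        statistics.fmean(population_draw(rng) for _ in range(sample_size))
        for _ in range(num_samples)
    ]


def exponential_draw(rng):
    """Skewed population (assumed): exponential with rate 1, so mean 1 and SD 1."""
    return rng.expovariate(1.0)


n = 30
means = sample_means(exponential_draw, sample_size=n, num_samples=10_000)

# The CLT predicts the sample means are roughly Normal(mu = 1, sd = sigma / sqrt(n)).
predicted_se = 1.0 / n ** 0.5
observed_mean = statistics.fmean(means)
observed_se = statistics.stdev(means)

# Share of sample means within 1.96 standard errors of the true mean;
# a Normal distribution puts about 95% of its mass there.
within = sum(abs(m - 1.0) <= 1.96 * predicted_se for m in means) / len(means)

print(f"mean of sample means: {observed_mean:.3f} (population mean 1.0)")
print(f"std of sample means:  {observed_se:.3f} (predicted {predicted_se:.3f})")
print(f"share within 1.96 SE: {within:.3f} (Normal: 0.950)")
```

The exact printed values depend on the random seed; with other seeds they should stay close to the predictions.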

In practice, the theorem is commonly applied when the sample size is 30 or larger. This is only a rule of thumb, however: for strongly skewed or heavy-tailed populations, much larger samples may be required.
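One way to see why the 30-observation rule can fail is through skewness. For independent, identically distributed observations, the skewness of the sample mean equals the population skewness divided by √n, so a very lopsided population needs far more data before its sampling distribution looks symmetric. The sketch below compares an exponential population (skewness 2) with a lognormal one, used here as an assumed example of a heavy-tailed population; the 0.5 cut-off for "nearly symmetric" is a hypothetical choice for illustration.

```python
import math


def skewness_of_mean(population_skewness, n):
    """Skewness of the mean of n i.i.d. draws: it shrinks like 1 / sqrt(n)."""
    return population_skewness / math.sqrt(n)


def lognormal_skewness(sigma):
    """Skewness of a lognormal distribution whose logarithm has standard deviation sigma."""
    s2 = sigma ** 2
    return (math.exp(s2) + 2) * math.sqrt(math.exp(s2) - 1)


def min_sample_size(population_skewness, max_skewness):
    """Smallest n for which the sample mean's skewness is at most max_skewness."""
    return math.ceil((population_skewness / max_skewness) ** 2)


populations = {
    "exponential": 2.0,  # skewness of every exponential distribution
    "lognormal (sigma=1.5)": lognormal_skewness(1.5),  # assumed heavy-tailed example
}

THRESHOLD = 0.5  # hypothetical cut-off for "close enough to symmetric"

for name, skew in populations.items():
    print(
        f"{name}: skewness of mean at n=30 is {skewness_of_mean(skew, 30):.2f}; "
        f"n needed for skewness <= {THRESHOLD}: {min_sample_size(skew, THRESHOLD)}"
    )
```

Under these assumptions the exponential population meets the cut-off at 16 observations, while the lognormal one needs several thousand. Skewness is only one aspect of non-normality, so this is a rough guide rather than a test.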

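This theorem was first formulated in general terms by the French mathematician Pierre-Simon Laplace in 1810. The Gaussian function is named in honor of the German mathematician Carl Friedrich Gauss, who used it to describe measurement errors, although Abraham de Moivre had derived the curve earlier.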

Figure 1: Central Limit Theorem

Source: Mathieu ROUAUD, CC BY-SA 4.0 <https://creativecommons.org/licenses/by-sa/4.0>, via Wikimedia Commons

Why is the Central Limit Theorem valuable?

The Central Limit Theorem has many applications, particularly in modeling, where it can justify assuming a bell-shaped distribution for certain features. This is because a single measured value of a feature can often be regarded as the sum, or weighted average, of many small, independent effects. In general, the more independent factors contribute comparably to a feature, the closer its distribution comes to a bell curve.
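A small Python simulation can illustrate this. Each simulated feature value is the sum of k independent Uniform(0, 1) effects, an assumption chosen for simplicity. For a Normal distribution, about 68.3% of values lie within one standard deviation of the mean; a single uniform effect gives only about 57.7% (that is, 1/√3), and the share moves toward 68.3% as more effects are added. The function names are hypothetical.

```python
import random
import statistics


def simulate_feature(num_effects, num_samples, seed=0):
    """Each feature value is the sum of `num_effects` independent Uniform(0, 1) effects."""
    rng = random.Random(seed)
    return [sum(rng.random() for _ in range(num_effects)) for _ in range(num_samples)]


def share_within_one_sd(values):
    """Fraction of values within one standard deviation of their mean (Normal: ~0.683)."""
    mu = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(abs(v - mu) <= sd for v in values) / len(values)


for k in (1, 2, 20):
    values = simulate_feature(num_effects=k, num_samples=20_000)
    print(f"{k:>2} effect(s): share within 1 SD = {share_within_one_sd(values):.3f}")
```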

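There are many other applications of this theorem. For example, in linear regression, the error term is often assumed to be normally distributed on the grounds that it is the sum of many small, independent errors. Ordinary Least Squares (OLS) estimates do not require this assumption, but the usual t-tests and F-tests on the coefficients rely on it.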
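The sketch below simulates this reasoning under assumed values: the data follow y = 2 + 3x plus an error built as the sum of 50 small, independent uniform shocks, none of which is Normal on its own. It fits a simple linear regression with the closed-form OLS formulas and checks that the residuals behave like Normal noise, with about 68% of them within one standard deviation. The coefficients, shock sizes, and helper name are illustrative, not from the source.

```python
import random
import statistics

rng = random.Random(42)


def composite_error(num_shocks=50, scale=0.1):
    """Hypothetical error term: the sum of many small, independent Uniform(-scale, scale) shocks."""
    return sum(rng.uniform(-scale, scale) for _ in range(num_shocks))


# Assumed true model for illustration: y = 2 + 3x + error.
xs = [rng.uniform(0, 10) for _ in range(5_000)]
ys = [2.0 + 3.0 * x + composite_error() for x in xs]

# Closed-form OLS estimates for simple linear regression.
x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
    (x - x_bar) ** 2 for x in xs
)
intercept = y_bar - slope * x_bar

# With an intercept, OLS residuals average to zero, so compare them with 0.
residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
sd = statistics.pstdev(residuals)
share = sum(abs(r) <= sd for r in residuals) / len(residuals)

print(f"intercept={intercept:.3f}, slope={slope:.3f}")
print(f"residual share within 1 SD: {share:.3f} (Normal: ~0.683)")
```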

Several authors have proven versions of this theorem for certain neural networks [1]. Moreover, the theorem is one of the foundations of significance tests and confidence intervals, and therefore of model comparison.
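As an example of the link to confidence intervals, the following sketch builds the usual Normal-approximation interval for a mean, x̄ ± z·s/√n, and then checks how often it covers the true mean when samples of 100 observations come from a skewed exponential population. The function name, seed, and sample sizes are assumptions for illustration; for small samples a t-based interval is usually preferred.

```python
import random
import statistics
from statistics import NormalDist


def mean_confidence_interval(data, confidence=0.95):
    """CLT-based interval: mean +/- z * s / sqrt(n), using the Normal approximation."""
    n = len(data)
    mean = statistics.fmean(data)
    se = statistics.stdev(data) / n ** 0.5
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return mean - z * se, mean + z * se


# Coverage check on a skewed population: exponential with true mean 1.
rng = random.Random(7)
trials, n, hits = 2_000, 100, 0
for _ in range(trials):
    sample = [rng.expovariate(1.0) for _ in range(n)]
    low, high = mean_confidence_interval(sample)
    hits += low <= 1.0 <= high  # count intervals that contain the true mean

print(f"empirical coverage: {hits / trials:.3f} (nominal 0.95)")
```

Because the population is skewed, the empirical coverage may come out slightly below the nominal 95%, and it should move closer as n grows.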

Central Limit Theorem and LogicPlum

LogicPlum’s platform uses automation to train and test hundreds of different models, many of which rely on the Central Limit Theorem, including Ordinary Least Squares and the methods used to compare neural networks. In addition, the theorem is used in some cases to approximate the properties of certain features.

Additional Resources

- [1] Sirignano, J. and Spiliopoulos, K. (2018). Mean Field Analysis of Neural Networks: A Central Limit Theorem. Available at https://arxiv.org/abs/1808.09372

- [2] jbstatistics (2012). Introduction to the Central Limit Theorem. Available at https://www.youtube.com/watch?v=Pujol1yC1_A