What Is Conditional Probability?

Conditional probability is the probability that an event A occurs given that another event B has occurred. It is read as "the probability of A given B" and written P(A|B).

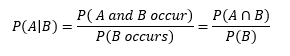

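Conditional probability does not require a causal relationship between the two events, nor that one happens before the other. It only describes how the knowledge that B occurred changes the probability of A. Mathematically, it is defined as the probability that both events occur divided by the probability of B, that is, P(A|B) = P(A ∩ B) / P(B), where P denotes probability and P(B) ≠ 0: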

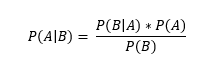
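The ratio definition, P(A|B) = P(A ∩ B) / P(B), can be checked by counting outcomes in a small sample space. The sketch below is illustrative only: the two-dice setup and the events A ("the sum is 8") and B ("the first die is even") are assumptions chosen for the example, not taken from the text above.

```python
from fractions import Fraction
from itertools import product

# Sample space: all 36 equally likely outcomes of rolling two fair dice.
outcomes = list(product(range(1, 7), repeat=2))

def prob(event):
    """Probability of an event, given as a predicate over outcomes."""
    favorable = sum(1 for o in outcomes if event(o))
    return Fraction(favorable, len(outcomes))

def is_a(o):
    # Hypothetical event A: the two dice sum to 8.
    return o[0] + o[1] == 8

def is_b(o):
    # Hypothetical event B: the first die shows an even number.
    return o[0] % 2 == 0

p_a = prob(is_a)                                  # unconditional P(A)
p_b = prob(is_b)                                  # P(B), must be nonzero
p_a_and_b = prob(lambda o: is_a(o) and is_b(o))   # joint P(A and B)
p_a_given_b = p_a_and_b / p_b                     # conditional P(A|B)

print(p_a, p_a_given_b)
```

Here P(A) is 5/36 while P(A|B) is 1/6, so learning that the first die is even raises the probability of a sum of 8.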

Because a conditional probability is often difficult to calculate directly, statisticians frequently use Bayes' formula. This equation, developed by the Reverend Thomas Bayes in the eighteenth century, states that:

P(A|B) = P(B|A) · P(A) / P(B)

where:

P(A|B): Conditional probability. The likelihood that A will happen given that B is true.

P(B|A): Conditional probability. The likelihood that B will happen given that A is true.

P(A) and P(B): Probabilities that A and B occur respectively. They are also known as marginal probabilities.

This formula applies when P(B) ≠ 0.
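As a worked illustration of Bayes' formula, P(A|B) = P(B|A) · P(A) / P(B), the sketch below computes the probability of a condition given a positive test. All numbers (1% prevalence, 95% sensitivity, 5% false-positive rate) and the function name `bayes` are hypothetical, chosen only to make the arithmetic concrete.

```python
from fractions import Fraction

def bayes(p_b_given_a, p_a, p_b):
    """Return P(A|B) from P(B|A), P(A) and P(B); requires P(B) != 0."""
    if p_b == 0:
        raise ValueError("P(B) must be nonzero")
    return p_b_given_a * p_a / p_b

# Hypothetical inputs for a diagnostic test.
p_disease = Fraction(1, 100)            # P(A): prevalence
p_pos_given_disease = Fraction(95, 100) # P(B|A): sensitivity
p_pos_given_healthy = Fraction(5, 100)  # false-positive rate

# Marginal P(B) via the law of total probability.
p_pos = (p_pos_given_disease * p_disease
         + p_pos_given_healthy * (1 - p_disease))

p_disease_given_pos = bayes(p_pos_given_disease, p_disease, p_pos)
print(p_disease_given_pos, float(p_disease_given_pos))
```

With these assumed inputs the result is 19/118, or roughly 16%: even after a positive result the condition remains unlikely, because it is rare to begin with.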

It is also essential to distinguish conditional probability from joint probability. The joint probability P(A ∩ B) is the probability that both events occur, whereas the conditional probability P(A|B) is the probability of A among only those cases in which B occurs.
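The difference between joint and conditional probability shows up clearly when both are computed from the same counts. This is a minimal sketch using a standard 52-card deck; the events A ("the card is a king") and B ("the card is a face card") are assumptions made for the example.

```python
from fractions import Fraction
from itertools import product

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "diamonds", "hearts", "spades"]
deck = list(product(ranks, suits))  # 52 equally likely cards

# B: the card is a face card (jack, queen or king).
face_cards = [card for card in deck if card[0] in {"J", "Q", "K"}]
# A and B together: the card is a king (every king is a face card).
kings = [card for card in face_cards if card[0] == "K"]

# Joint probability: kings counted against the whole deck.
p_joint = Fraction(len(kings), len(deck))
# Conditional probability: kings counted against face cards only.
p_conditional = Fraction(len(kings), len(face_cards))

print(p_joint, p_conditional)
```

The same four kings give a joint probability of 1/13 but a conditional probability of 1/3; only the denominator changes, from all cards to the cards in which B holds.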

Why Is Conditional Probability Valuable?

Conditional probability is vital in a wide range of fields, such as classification, decision theory, prediction, and diagnostics. In each of these, a conclusion is drawn from evidence that has already been observed.

Bayes’ formula is handy in these cases because the probability of the evidence given a known outcome, P(B|A), is usually much easier to estimate from past data than P(A|B) itself. For example, in classification, Bayes’ formula gives the probability that an item belongs to a particular class given the observed evidence, computed from how often that evidence appears in each class and how common each class is.
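The classification case can be sketched in a few lines. The example below is a hedged illustration, not a description of any particular product: the class names ("spam", "ham"), the prior and likelihood values, and the function name `posterior` are all hypothetical.

```python
from fractions import Fraction

def posterior(priors, likelihoods):
    """Return P(class | evidence) for each class using Bayes' formula.

    priors[c]      is P(class = c)
    likelihoods[c] is P(evidence | class = c)
    """
    # Joint probability of each class together with the evidence.
    joint = {c: priors[c] * likelihoods[c] for c in priors}
    # Marginal probability of the evidence; it must be nonzero.
    evidence = sum(joint.values())
    if evidence == 0:
        raise ValueError("evidence has zero probability under every class")
    return {c: joint[c] / evidence for c in joint}

# Hypothetical estimates taken from past, labelled messages.
priors = {"spam": Fraction(2, 5), "ham": Fraction(3, 5)}
# Hypothetical probability that a message contains the word "offer".
likelihoods = {"spam": Fraction(1, 2), "ham": Fraction(1, 20)}

post = posterior(priors, likelihoods)
predicted = max(post, key=post.get)  # class with the highest posterior
print(post, predicted)
```

Under these assumed numbers, a message containing the word is classified as spam with posterior probability 20/23, even though spam is the less common class a priori.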

Conditional Probability and LogicPlum

Conditional probability plays a significant role in many of the calculations performed by LogicPlum’s platform, including classification, pattern search, and forecasting. It is also the foundation of Bayesian inference, which can be used in neural networks to calculate probability distributions over weights.

Other uses include a probabilistic framework for fitting a model to a training set (maximum a posteriori estimation, or MAP) and classifiers such as the Bayes Optimal Classifier and Naïve Bayes, which have applications in diverse fields such as financial forecasting and computer vision.
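To make MAP estimation concrete, here is a minimal sketch for the simplest possible model: estimating the bias of a coin from observed flips. It assumes a Beta(a, b) prior on the bias, for which the posterior mode has a known closed form; the data (7 heads, 3 tails), the prior parameters, and the function name `map_coin_bias` are hypothetical.

```python
from fractions import Fraction

def map_coin_bias(heads, tails, a=1, b=1):
    """MAP estimate of a coin's heads probability under a Beta(a, b) prior.

    The posterior is Beta(heads + a, tails + b); its mode is
    (heads + a - 1) / (heads + tails + a + b - 2) when a, b >= 1
    and the denominator is positive.
    """
    if a < 1 or b < 1:
        raise ValueError("this closed form assumes a >= 1 and b >= 1")
    denominator = heads + tails + a + b - 2
    if denominator <= 0:
        raise ValueError("not enough data or prior information for a mode")
    return Fraction(heads + a - 1, denominator)

# Hypothetical training data: 7 heads and 3 tails.
mle = map_coin_bias(7, 3)             # uniform prior, equals the plain frequency
map_est = map_coin_bias(7, 3, 2, 2)   # Beta(2, 2) prior pulls toward 1/2
print(mle, map_est)
```

With a uniform prior the estimate is the raw frequency 7/10; the Beta(2, 2) prior shifts it to 2/3, showing how MAP combines the training data with prior belief.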

Additional resources

- Investopedia: Conditional Probability. Available at https://www.investopedia.com/terms/c/conditional_probability.asp