What Is SMOTE?

SMOTE, or Synthetic Minority Oversampling Technique, is an oversampling method used to correct imbalanced data. Imbalanced data refers to the case where the classes in a dataset are not represented equally. The imbalance can be mild, moderate, or extreme, depending on the ratio between the majority and the minority classes. Imbalanced data has negative effects because it biases the machine learning model toward the most populous class.
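To make the bias concrete, here is a minimal sketch using made-up labels (95 majority samples and 5 minority samples; these numbers are an assumption for illustration only). A "model" that always predicts the majority class looks accurate while never detecting the minority class.

```python
from collections import Counter

# Hypothetical labels: 95 majority samples (0) and 5 minority samples (1).
labels = [0] * 95 + [1] * 5

counts = Counter(labels)
majority_class, majority_count = counts.most_common(1)[0]
minority_count = min(counts.values())

# Ratio of majority to minority samples; larger means more imbalanced.
imbalance_ratio = majority_count / minority_count

# A trivial "model" that always predicts the majority class.
predictions = [majority_class] * len(labels)

# Fraction of all samples predicted correctly.
accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)

# Fraction of minority samples that were correctly identified.
minority_recall = sum(
    p == 1 and y == 1 for p, y in zip(predictions, labels)
) / minority_count

print(imbalance_ratio)   # 19.0
print(accuracy)          # 0.95
print(minority_recall)   # 0.0
```

The high accuracy comes entirely from the majority class, which is why accuracy alone can hide the problem that oversampling tries to address.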

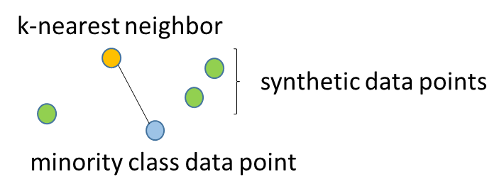

SMOTE works by adding synthetic data. First, it chooses a random data point from the minority class and finds that point's k nearest neighbors within the same class. Then, it creates a new value, called a synthetic data point, at a random position on the line segment between the chosen point and one of those neighbors. The procedure is repeated until the minority class reaches the desired balance with the majority class.

Figure 1: SMOTE’s data addition technique
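The procedure described above can be sketched in a few lines of plain Python. This is an illustrative simplification, not the reference implementation from the original paper: the function name `smote_sketch`, its parameters, and the sample data are all hypothetical, Euclidean distance is assumed, and the neighbor search is brute force.

```python
import math
import random


def smote_sketch(minority, n_synthetic, k=5, seed=0):
    """Return n_synthetic points interpolated between minority samples.

    `minority` is a list of equal-length numeric tuples (at least two).
    """
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # a sample cannot be its own neighbor

    synthetic = []
    for _ in range(n_synthetic):
        # 1. Pick a random sample from the minority class.
        base_idx = rng.randrange(len(minority))
        base = minority[base_idx]

        # 2. Find its k nearest minority neighbors (Euclidean distance).
        others = [p for j, p in enumerate(minority) if j != base_idx]
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]

        # 3. Pick one neighbor and a random position along the segment.
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # uniform in [0, 1)

        # 4. Interpolate feature by feature between base and neighbor.
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbor))
        )
    return synthetic


# Hypothetical two-feature minority class with four samples.
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote_sketch(minority, n_synthetic=6, k=2)
print(len(new_points))  # 6
```

Because every synthetic point lies between two existing minority points, the new data stays inside the region the minority class already occupies. In practice, a maintained implementation such as the `SMOTE` class in the imbalanced-learn library is usually a better choice than hand-written code.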

SMOTE was proposed by Chawla, Bowyer, Hall, and Kegelmeyer in 2002 and has since proven effective in several areas. However, its effect on very large datasets has not yet been fully investigated.

Why Is SMOTE Important?

SMOTE is particularly important in machine learning because it provides a better approach to oversampling than the traditional one. Traditionally, oversampling was done by replicating data points from the minority class, which is equivalent to giving them more weight. However, these repeated points provide no extra information to the machine learning algorithm and can encourage overfitting. SMOTE, in contrast, creates new data points that are close to the actual ones and thus provides some extra information to the training algorithm.
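The difference between the two approaches can be seen by counting distinct points after oversampling. The sketch below uses a made-up minority class of four points and, to keep the contrast short, interpolates between random pairs of points rather than between nearest neighbors, so it is a simplified stand-in for SMOTE rather than the full method.

```python
import random

rng = random.Random(0)

# Hypothetical two-feature minority class with four samples.
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]

# Traditional oversampling: draw existing points again, with replacement.
duplicated = minority + [rng.choice(minority) for _ in range(6)]

# SMOTE-style oversampling: interpolate between two distinct minority points.
# (The nearest-neighbor search is omitted here for brevity.)
interpolated = list(minority)
for _ in range(6):
    a, b = rng.sample(minority, 2)
    gap = rng.random()  # random position along the segment from a to b
    interpolated.append(tuple(x + gap * (y - x) for x, y in zip(a, b)))

# Both sets now hold 10 points, but duplication adds no new locations.
print(len(set(duplicated)))    # 4
print(len(set(interpolated)))  # more than 4
```

Duplication enlarges the minority class without adding any new location in feature space, whereas interpolation gives the training algorithm points it has not seen before.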

SMOTE + LogicPlum

SMOTE is a technique that, when properly applied, can improve a model’s performance. However, using it well requires a solid knowledge of statistics and machine learning techniques.

LogicPlum’s platform eliminates this need by providing automated calculations. As a result, its users can benefit from techniques such as SMOTE without having to understand them at an expert level. They can instead concentrate on what they know best, their own fields of expertise, and focus on interpreting results and forecasting new events.

Guide to Further Reading

For those interested in reading the original publication by Chawla et al.:

Chawla, N. V., Bowyer, K. W., Hall, L. O., and Kegelmeyer, W. P. SMOTE: Synthetic Minority Over-sampling Technique. Journal of Artificial Intelligence Research 16 (2002) 321–357. Available at https://arxiv.org/pdf/1106.1813.pdf