What is an Epoch in Machine Learning?

An epoch is one complete pass of a learning algorithm through the entire training dataset. The number of epochs, which defines how many times the algorithm works through the complete dataset, is a hyperparameter of the learning algorithm.

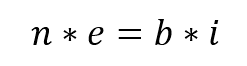

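When the dataset is very large, it is usually divided into smaller batches, and the model parameters are updated once per batch; each such update is called an iteration. If the batch size is b, the total number of iterations is i, the number of epochs is e, and the size of the dataset is n, then (assuming n is a multiple of b) the relationship between them is

$$ e \cdot n = i \cdot b $$

so each epoch takes n / b iterations.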
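The arithmetic linking dataset size, batch size, iterations, and epochs can be checked with a small helper. This is a minimal sketch with hypothetical function names; it assumes that when n is not a multiple of b, the last, smaller batch is still used (some training frameworks can be configured to drop it instead).

```python
import math


def iterations_per_epoch(n: int, b: int) -> int:
    """Number of batches (iterations) needed to see all n samples once.

    If n is not a multiple of b, the final batch is smaller, so we round up.
    """
    if n <= 0 or b <= 0:
        raise ValueError("n and b must be positive")
    return math.ceil(n / b)


def total_iterations(n: int, b: int, e: int) -> int:
    """Total number of parameter updates over e epochs."""
    return e * iterations_per_epoch(n, b)


print(iterations_per_epoch(2000, 500))   # 4
print(total_iterations(2000, 500, 10))   # 40
print(iterations_per_epoch(2000, 300))   # 7 (six batches of 300, one of 200)
```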

In principle, the number of epochs can be any positive integer, with no upper limit. In practice, it is usually set large enough for the training error to become sufficiently small. Common values are 10, 100, 500, 1000, and greater.
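To make the idea of running until the error is small enough concrete, here is a minimal sketch in plain Python. It assumes a toy linear-regression problem (data generated from y = 3x + 2 with noise), mini-batch gradient descent, and hypothetical settings for the learning rate and error tolerance (`lr=0.1`, `tol=0.02`); none of these come from the article. Each pass of the outer loop is one epoch, and training stops as soon as the mean squared error over the whole dataset falls below the tolerance or `max_epochs` is reached.

```python
import random


def make_data(n, seed=0):
    """Hypothetical toy data: y = 3x + 2 plus small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


def mse(w, c, xs, ys):
    """Mean squared error of the line w*x + c over the whole dataset."""
    return sum((w * x + c - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_epochs(xs, ys, batch_size=16, max_epochs=1000, lr=0.1, tol=0.02, seed=0):
    """Mini-batch gradient descent; each pass of the outer loop is one epoch.

    Returns (w, c, epochs_used, final_mse).
    """
    rng = random.Random(seed)
    w, c = 0.0, 0.0
    order = list(range(len(xs)))
    loss = mse(w, c, xs, ys)
    for epoch in range(1, max_epochs + 1):
        rng.shuffle(order)  # visit the samples in a new order each epoch
        for start in range(0, len(order), batch_size):  # one iteration per batch
            batch = order[start:start + batch_size]
            # Gradients of the batch's mean squared error w.r.t. w and c
            grad_w = sum(2 * (w * xs[i] + c - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_c = sum(2 * (w * xs[i] + c - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            c -= lr * grad_c
        loss = mse(w, c, xs, ys)  # error after a full pass over the data
        if loss < tol:  # error is "small enough": stop before max_epochs
            return w, c, epoch, loss
    return w, c, max_epochs, loss


xs, ys = make_data(200)
w, c, epochs_used, final_mse = train_epochs(xs, ys)
print(f"stopped after {epochs_used} epochs: w={w:.2f}, c={c:.2f}, mse={final_mse:.4f}")
```

Because the tolerance is checked once per epoch, `max_epochs` acts only as an upper bound; the number of epochs actually used depends on the data, the learning rate, and the batch size.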

Why is the Epoch Important in Machine Learning?

The number of epochs plays an important role in machine learning modeling, as it is key to finding the model that fits the data with the lowest error: too few epochs leave the model undertrained, while too many can cause it to overfit. Both the number of epochs and the batch size have to be specified before training the neural network.

Specifying both parameters is more an art than a science, as there are no specific rules for how to select their values. In practice, data analysts must try different values until they find what works best for a specific problem.
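Trying different values in a systematic way is often done with a grid search: train once for every combination and keep the one with the lowest error on held-out validation data. The sketch below is a minimal, hypothetical illustration; the toy linear model, the synthetic data, the learning rate, and the candidate grids are all assumptions chosen for brevity, and real projects would use their own model and often cross-validation instead of a single split.

```python
import itertools
import random


def make_split(n_train=160, n_val=40, seed=0):
    """Hypothetical toy data (y = 3x + 2 plus noise), split into train/validation."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n_train + n_val)]
    data = [(x, 3.0 * x + 2.0 + rng.gauss(0.0, 0.1)) for x in xs]
    return data[:n_train], data[n_train:]


def fit(train, epochs, batch_size, lr=0.1, seed=0):
    """Mini-batch gradient descent on the line w*x + c for a fixed number of epochs."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0
    data = list(train)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w = sum(2 * (w * x + c - y) * x for x, y in batch) / len(batch)
            grad_c = sum(2 * (w * x + c - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            c -= lr * grad_c
    return w, c


def val_mse(params, val):
    """Mean squared error of the fitted line on the validation set."""
    w, c = params
    return sum((w * x + c - y) ** 2 for x, y in val) / len(val)


def grid_search(train, val, epoch_grid, batch_grid):
    """Try every (epochs, batch_size) pair; keep the lowest validation error."""
    results = {}
    for epochs, batch_size in itertools.product(epoch_grid, batch_grid):
        results[(epochs, batch_size)] = val_mse(fit(train, epochs, batch_size), val)
    best = min(results, key=results.get)
    return best, results


train, val = make_split()
best, results = grid_search(train, val, epoch_grid=[1, 5, 20], batch_grid=[8, 32])
for (epochs, batch_size), err in sorted(results.items()):
    print(f"epochs={epochs:>2} batch_size={batch_size:>2} val_mse={err:.4f}")
print("best:", best)
```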

One way to find the right number of epochs is to monitor learning performance by plotting the model's error, on both the training data and held-out validation data, against the number of epochs, in what is called a learning curve. These curves are very useful for detecting when the model is overfitting, underfitting, or suitably trained.
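Reading a learning curve can also be partly automated. The sketch below assumes the training and validation losses have already been recorded once per epoch; it picks the epoch with the lowest validation loss using a patience-based early-stopping rule, and flags likely overfitting when training loss keeps falling while validation loss rises. The function names, the patience of 3 epochs, the 5% tolerance, and the loss values are hypothetical, chosen only for illustration.

```python
from typing import Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 3) -> int:
    """Return the 1-based epoch with the lowest validation loss, scanning the
    curve and stopping once the loss has not improved for `patience` epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


def looks_overfit(train_losses: Sequence[float], val_losses: Sequence[float],
                  rel_tol: float = 0.05) -> bool:
    """Heuristic: training loss is still falling after the best validation epoch,
    while validation loss has risen more than rel_tol above its minimum."""
    best = min(range(len(val_losses)), key=val_losses.__getitem__)
    train_still_falling = train_losses[-1] < train_losses[best]
    val_rose = val_losses[-1] > val_losses[best] * (1 + rel_tol)
    return train_still_falling and val_rose


# Made-up per-epoch losses, for illustration only
train_curve = [0.90, 0.60, 0.42, 0.33, 0.27, 0.22, 0.18, 0.15, 0.12, 0.10]
val_curve = [0.95, 0.66, 0.50, 0.43, 0.41, 0.42, 0.44, 0.47, 0.51, 0.55]

print(early_stopping_epoch(val_curve))        # 5
print(looks_overfit(train_curve, val_curve))  # True
```

In a real training loop, the same rule would usually be checked after each epoch so that training can stop early, rather than applied to a curve recorded in advance.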

Epoch and LogicPlum

Creating machine learning models requires skill and expertise. Learning to find the correct values for epochs and batch sizes takes time and practice, particularly with new algorithms and technologies continuously appearing on the market.

For LogicPlum’s users, this is not a problem, as all calculations, estimations, and optimizations are done automatically. As a result, users can concentrate on what matters most: using and interpreting the model, leaving all mathematical and statistical considerations to the platform.
